Introduction to the Course

This course offers a comprehensive introduction to neural networks and deep learning, covering the key concepts, algorithms, and techniques that power modern AI systems.

Who is this course for?

- Freshers with a graduate degree in any discipline

- Professionals who want to switch from a non-IT role to an IT role

- Professionals who want to boost their career

Requirements

Before starting this course, it is recommended that you have basic knowledge of:

- The Python programming language

- Mathematics (especially algebra and statistics).

What You'll Learn

How Neural Networks Work and Backpropagation

- What can Deep Learning do?

- The Rise of Deep Learning.

- The Essence of Neural Networks.

- Working with different datatypes.

- The Perceptron.

- Gradient Descent.

- The Forward Propagation.

- Backpropagation.

Loss Functions

- Mean Squared Error (MSE).

- L1 Loss (MAE).

- Huber Loss.

- Binary Cross Entropy Loss.

- Cross Entropy Loss.

- Softmax Function.

- KL divergence Loss.

- Contrastive Loss.

- Hinge Loss.

- Triplet Ranking Loss.

Activation Functions

- Why we need activation functions.

- Sigmoid Activation.

- Tanh Activation.

- ReLU and PReLU.

- Exponential Linear Units (ELU).

- Gated Linear Units (GLU).

- Swish Activation.

- Mish Activation.

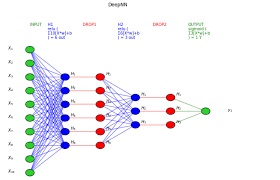

Regularization and Normalization

- Overfitting.

- L1 and L2 Regularization.

- Dropout.

- DropConnect.

- Normalization.

- Batch Normalization.

- Layer Normalization.

- Group Normalization.

Optimization

- Batch Gradient Descent.

- Stochastic Gradient Descent.

- Mini-Batch Gradient Descent.

- Exponentially Weighted Average Intuition.

- Exponentially Weighted Average Implementation.

- Bias Correction in Exponentially Weighted Averages.

- Momentum.

- RMSProp.

- Adam Optimization.

- SWATS - Switching from Adam to SGD.

- Weight Decay.

- Decoupling Weight Decay.

- AMSGrad.

Hyperparameter Tuning and Learning Rate Scheduling

- Introduction to Hyperparameter Tuning and Learning Rate Recap.

- Step Learning Rate Decay.

- Cyclic Learning Rate.

- Cosine Annealing with Warm Restarts.

- Batch Size vs Learning Rate.

Introduction to PyTorch

- Computation Graphs and Deep Learning Frameworks.

- Installing PyTorch and an Introduction.

- How PyTorch Works.

- Torch Tensors.

- NumPy Bridge, Tensor Concatenation and Adding Dimensions.

- Automatic Differentiation.

- Loss Functions in PyTorch.

- Weight Initialization in PyTorch.

- Data Preprocessing.

- Data Normalization.

- Creating and Loading the Dataset.

- Building the Network.

- Training the Network.

- Visualize Learning.

Data Augmentation

- Introduction to Data Augmentation.

- Data Augmentation Techniques Part 1.

- Data Augmentation Techniques Part 2.

- Data Augmentation Techniques Part 3.

Implementing Neural Networks with NumPy

- The Dataset and Hyperparameters.

- Understanding the Implementation.

- Forward Propagation.

- Loss Function.

- Prediction.

- Notebook for the following Lecture.

- Backpropagation Equations.

- Backpropagation.

- Initializing the Network.

- Training the Model.

Convolutional Neural Networks (CNN)

- Prerequisite: Filters.

- Introduction to Convolutional Networks and the need for them.

- Filters and Features.

- Convolution over Volume Animation Resource.

- Convolution over Volume Animation.

- More on Convolutions.

- Test your Understanding.

- Quiz Solution Discussion.

- A Tool for Convolution Visualization.

- Activation, Pooling and FC.

- CNN Visualization.

- Important formulas.

- CNN Characteristics.

- Regularization and Batch Normalization in CNNs.

- DropBlock: Dropout in CNNs.

- Softmax with Temperature.

CNN Architectures

- CNN Architectures.

- Residual Networks.

- Stochastic Depth.

- Densely Connected Networks.

- Squeeze-Excite Networks.

- Separable Convolutions.

- Transfer Learning.

- Is a 1x1 convolutional filter equivalent to an FC layer?

Convolutional Networks Visualization

- Data and the Model.

- Processing the Model.

- Visualizing the Feature Maps.

YOLO Object Detection (Theory)

- YOLO Theory.

Autoencoders and Variational Autoencoders

- Autoencoders.

- Denoising Autoencoders.

- The Problem in Autoencoders.

- Variational Autoencoders.

- Probability Distributions Recap.

- Loss Function Derivation for VAE.

- Deep Fake.

Neural Style Transfer

- NST Theory.

Recurrent Neural Networks (RNN)

- Why do we need RNNs.

- Vanilla RNNs.

- Test your understanding.

- Quiz Solution Discussion.

- Backpropagation Through Time.

- Stacked RNNs.

- Vanishing and Exploding Gradient Problem.

- LSTMs.

- Bidirectional RNNs.

- GRUs.

- CNN-LSTM.

Word Embeddings

- What are Word Embeddings.

- Visualizing Word Embeddings.

- Measuring Word Embeddings.

- Word Embeddings Models.

- Word Embeddings in PyTorch.

Transformers

- Sanity Check on Previous Sections.

- Introduction to Transformers.

- Input Embeddings.

- Positional Encoding.

- Multi-Head Attention.

- Concat and Linear.

- Residual Learning.

- Layer Normalization.

- Feed Forward.

- Masked Multi-Head Attention.

- Multi-Head Attention in Decoder.

- Cross Entropy Loss.

- KL Divergence Loss.

- Label Smoothing.

- Dropout.

- Learning Rate Warmup.

BERT

- What BERT is and how it is structured.

- Masked Language Modelling.

- Next Sentence Prediction.

- Fine-tuning BERT.

- Exploring Transformers.

Other Transformers

- Universal Transformers.

- Visual Transformers.

GPT (Generative Pre-trained Transformer)

- What is GPT.

- Zero-Shot Predictions with GPT.

- Byte-Pair Encoding.

- Technical Details of GPT.

- Playing with HuggingFace models.

- Implementation.

Trainer Expertise

This program is overseen by a team of professionals. We designed it by drawing on more than 23 years of experience delivering corporate and job-oriented training. Our students work in almost all top MNCs across India.

Job Opportunities

100% placement record: each student has successfully transitioned into their desired machine learning role.

Course Duration

16 Weeks

Fees

Training + Job Assistance: ₹35,000

- Admission: ₹10,000

- After 1 month: ₹25,000